Connecting Microsoft Business Central to an Azure Data Lake - Part 1

- Jesper Theil Hansen

- Mar 27

- 4 min read

Updated: Apr 6

- Part 1: Scheduling of Export and Sync
- Part 2: Possible timing clashes/conflicts that can corrupt data
- Part 3: Duplicate records after deadlock error in BC
- Part 4: Archive old data

For about a year now, I have been managing a solution for a client where, based on the BC2ADLS project, we run an Azure Data Lake connected to an on-premises Microsoft Business Central implementation. I thought I would share some of what I have learned, along with a few tips on how we adapted the solution to fit this customer's use case and requirements. This will be in multiple parts, each addressing different aspects of the process.

The BC2ADLS solution was first published as a sample by the Microsoft Business Central Dev team. That version is not actively maintained, but can be found here: https://github.com/microsoft/bc2adls

The currently maintained version is managed by Bert Verbeek, and the best way to get started and understand the solution is to read through the ReadMe and Setup files here: https://github.com/Bertverbeek4PS/bc2adls/

I won't go into detail about the basic setup and implementation here, but there are two different paths you can take for the Azure side of the solution: Azure Data Lake with Synapse, or Microsoft Fabric.

This implementation and most of the changes and suggestions in these blog posts apply to the Azure Data Lake approach, but can be adapted for Fabric if you wish to.

First challenge: Scheduling and Synchronization

The BC2ADLS solution has two primary sides to it:

- Business Central exports data deltas at intervals.

- Synapse pipelines merge and consolidate the deltas into the data lake.

In our implementation we needed to export data from 90 tables to the lake for different consumers to read. Not all data needed to be equally current, though: for some tables it was fine to synchronize once every 24 hours during the night, while other data had to be available every hour.

The default BC2ADLS implementation lets you set up a Job Queue Entry to schedule the data lake export from BC. Our testing showed that this export was reasonably fast and reliable, and didn't affect the running system in any noticeable way. So we chose to set up the export from BC to run at the top of every hour, on minute :00. This exports all new data and changes from all the tables configured for export to the data lake delta folder.

On the Azure side, the consolidation of data is handled by Synapse pipelines, which in turn use a dataflow and some additional artifacts that are all included in the project. The base solution comes with 3 pipelines: Consolidation_OneEntity, Consolidation_CheckForDeltas and Consolidation_AllEntities.

Consolidation_OneEntity is the one that runs the actual sync. Consolidation_CheckForDeltas looks in the delta folder for any new delta files for the entity and, if none are found, doesn't call the OneEntity flow, saving some processing time. Finally, the Consolidation_AllEntities pipeline loops through all the entities set up for export in Business Central and calls Consolidation_CheckForDeltas for each of them, thus syncing every entity that has new deltas available.

This is fine if, for example, you just want to sync everything once per 24 hours at midnight: simply run Consolidation_AllEntities using a trigger in Synapse that fires at that time every day. Or, if you only export a few entities, you could create separate triggers at preferred intervals for each entity and have them run Consolidation_CheckForDeltas at the times you want the sync to happen for that entity.
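To make the call chain concrete, here is a minimal Python sketch of how the three pipelines relate to each other. It only models the control flow described above and is not the Synapse implementation: the function names mirror the pipeline names, while the `deltas` dictionary and the `consolidated` list are hypothetical stand-ins for the delta folder and the dataflow.

```python
def consolidation_one_entity(entity, deltas, consolidated):
    """Stand-in for Consolidation_OneEntity: record that the entity was merged."""
    consolidated.append((entity, len(deltas[entity])))


def consolidation_check_for_deltas(entity, deltas, consolidated):
    """Stand-in for Consolidation_CheckForDeltas: skip entities without new delta files."""
    if deltas.get(entity):
        consolidation_one_entity(entity, deltas, consolidated)
        return True
    return False


def consolidation_all_entities(entities, deltas, consolidated):
    """Stand-in for Consolidation_AllEntities: loop over every exported entity."""
    return [e for e in entities if consolidation_check_for_deltas(e, deltas, consolidated)]


# Hypothetical delta folder contents: entity name -> list of delta file names.
deltas = {"GLEntry": ["d1.csv", "d2.csv"], "Customer": [], "Item": ["d3.csv"]}
consolidated = []
synced = consolidation_all_entities(["GLEntry", "Customer", "Item"], deltas, consolidated)
print(synced)
```

In this model only the entities with pending delta files reach the OneEntity step; the others are skipped by the check.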

But for us, with 90 entities exported and a wish for easier control over how the syncs are scheduled, we needed another solution.

Synchronization Groups

We chose to add an additional configuration field to the "ADLSE Table" :

    table 82561 "ADLSE Table"
    {
        Access = Internal;
        DataClassification = CustomerContent;
        DataPerCompany = false;

        fields
        {
            ...
            field(6; SyncGroup; Text[32])
            {
                Editable = true;
                Caption = 'Sync Group';
            }
        }
    }

This is just a text field that lets me pass information from the setup to the pipelines. Here is an example of different SyncGroups in the setup:

As mentioned, these are just named groups so there is no significance to the 1h name other than being able to use it in Synapse. What we want to achieve here is that Synapse consolidates GLEntry every hour, but Customer only once per 24 hours.

This setting is exported with the schema and added to the deltas manifest file, deltas.manifest.cdm.json, for each entity. I made the change in the BC2ADLS module, but you can also just modify the generated manifest file. We actually did that in the beginning, using a PowerShell script.
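As an illustration of the manual route, the following Python sketch adds a `syncGroup` property to each entity entry in a manifest file. It is not the PowerShell script we used. It assumes the manifest has a top-level `entities` array whose items carry an `entityName`, and that the pipeline reads `syncGroup` from those same items. The function name, the `groups` mapping and the `default` fallback group are made up for the example.

```python
import json
from pathlib import Path


def add_sync_groups(manifest_path, groups, default="24h"):
    """Set syncGroup on every entity entry in the manifest and return the result.

    groups maps entity name -> sync group name (hypothetical mapping);
    entities that are not listed get the `default` group.
    """
    path = Path(manifest_path)
    manifest = json.loads(path.read_text(encoding="utf-8"))
    # Assumption: entity entries live in a top-level "entities" array.
    for entity in manifest.get("entities", []):
        entity["syncGroup"] = groups.get(entity.get("entityName"), default)
    path.write_text(json.dumps(manifest, indent=2), encoding="utf-8")
    return manifest
```

Calling `add_sync_groups("deltas.manifest.cdm.json", {"GLEntry": "1h"})` on a local copy would tag the GLEntry entry with the 1h group and everything else with the fallback. Check the entity names against your own manifest before relying on it.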

On the Synapse side, I added a new pipeline that we use instead of Consolidation_AllEntities: Consolidation_SyncGroup. This pipeline takes an additional parameter, the name of the SyncGroup. It loops through all entities and executes Consolidation_CheckForDeltas (which in turn calls Consolidation_OneEntity if there are new deltas) for every entity assigned to that SyncGroup.
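The selection this pipeline performs can be modelled in a few lines of Python. This is only a sketch of the idea: the `entities` list stands in for the entity entries read from the manifest, and the field names `entityName` and `syncGroup`, as well as the helper name, are assumptions for the example.

```python
def entities_in_sync_group(entities, sync_group):
    """Return the names of the entities assigned to the given sync group."""
    return [e["entityName"] for e in entities if e.get("syncGroup") == sync_group]


# Hypothetical entity entries, each tagged with a named group.
entities = [
    {"entityName": "GLEntry", "syncGroup": "1h"},
    {"entityName": "Customer", "syncGroup": "24h"},
    {"entityName": "Item", "syncGroup": "24h"},
]

hourly = entities_in_sync_group(entities, "1h")
nightly = entities_in_sync_group(entities, "24h")
print(hourly, nightly)
```

Because the group is just a string compared for equality, adding a new interval only requires a new group name and a matching trigger.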

The Consolidate If/Then Activity uses this logic to choose entities:

    @equals(item().syncGroup, pipeline().parameters.syncGroup)

Running the SyncGroups

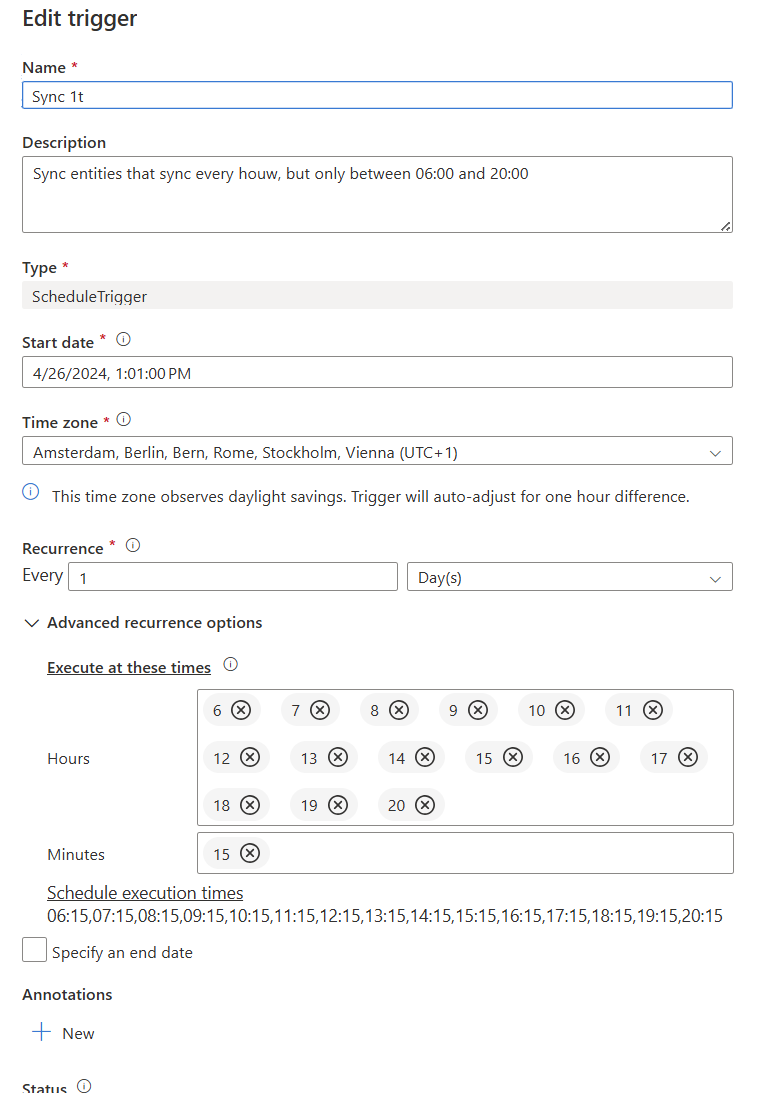

The final piece of the puzzle is to trigger the SyncGroup pipeline at the desired intervals. I have set up 3 triggers, named the same as the SyncGroups (1h, 12h and 24h), and each is set to fire at the corresponding interval. Here is the configuration for the 1h sync, for example:
