Connecting Microsoft Business Central to an Azure Data Lake - Part 2

- Jesper Theil Hansen

- Mar 28


Updated: Apr 6

- Part 1: Scheduling of Export and Sync

- Part 2: Possible timing clashes/conflicts that can corrupt data

- Part 3: Duplicate records after deadlock error in BC

- Part 4: Archive old data

After going live with the solution and the data lake sync, we ran into a problem which corrupted data in the lake.

Data Export clashes with Pipeline Sync

I believe the setup instructions for bc2adls mention this: it is important to make sure the Business Central data export doesn't run at the same time as the pipeline syncs the deltas into the final data in the lake.

As I mentioned in Part 1, we export data from BC every hour at minute :00, so we configured the hourly sync pipelines to run every hour at minute :15. This gave the BC export ample time to finish before the pipeline ran, and left the pipeline 45 minutes to finish before the next export. This worked fine for a while, but at some point we found data missing from the lake.
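The timing margin is simple arithmetic. The small Python sketch below (the function and constant names are made up for illustration) computes the gap between the :15 sync trigger and the next :00 export, and flags a sync run that would still be active when BC exports again.

```python
EXPORT_MINUTE = 0       # BC exports at minute :00 every hour
SYNC_START_MINUTE = 15  # hourly sync pipelines are triggered at :15


def minutes_until_next_export(sync_start: int = SYNC_START_MINUTE,
                              export_minute: int = EXPORT_MINUTE) -> int:
    """Minutes from the sync trigger until the next BC export starts."""
    return (export_minute - sync_start) % 60


def overlaps_next_export(sync_duration_min: float) -> bool:
    """True if a sync of this length is still running when BC exports again."""
    return sync_duration_min > minutes_until_next_export()


print(minutes_until_next_export())  # 45
print(overlaps_next_export(30))     # False
print(overlaps_next_export(50))     # True
```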

After some investigation, we found that it was caused by a combination of factors:

We had added more and more entities to the 1-hour sync group

We had a relatively small Spark pool (slower execution)

Pipelines run in batches, and how many can run per batch depends on your vCore allocation

There was an Azure issue affecting performance

A pipeline failed completely, possibly due to a temporary Azure outage
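To see why adding entities matters when pipelines run in batches, here is a back-of-the-envelope model in Python. It assumes batches run one after another and that every batch takes about the same time; the function name and the figures in the example are hypothetical, not measurements from our environment.

```python
import math


def estimated_sync_minutes(entities: int, pipelines_per_batch: int,
                           minutes_per_batch: float) -> float:
    """Rough runtime of one sync group.

    Assumes up to `pipelines_per_batch` entity pipelines run in parallel and
    each batch starts only when the previous one has finished.
    """
    batches = math.ceil(entities / pipelines_per_batch)
    return batches * minutes_per_batch


WINDOW_MINUTES = 45  # from the :00 export / :15 sync schedule

# Hypothetical figures: same pool, same per-batch time, more entities.
for n in (20, 35):
    runtime = estimated_sync_minutes(n, pipelines_per_batch=5, minutes_per_batch=8)
    print(n, runtime, runtime > WINDOW_MINUTES)
# 20 32 False
# 35 56 True
```

In this model, a slower batch (smaller Spark pool, or an Azure performance issue) multiplies the runtime just like extra batches do, so several modest factors can push the total past the window together.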

Together, these factors caused the 1-hour pipelines to run for more than 45 minutes. When BC exported data at the top of the hour, some pipelines were still running, and when they finished they deleted all delta files, including the newly exported ones that had not yet been synced.

If a pipeline runs even longer (which happened once) or simply fails, the next trigger can fire and start the pipeline again while the previous run hasn't finished correctly. This also corrupts data.
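The data loss in the first scenario is a race between "list the files to process" and "delete everything in the folder". The Python sketch below models it on a local temporary directory rather than the storage account; original_sync is a hypothetical, heavily simplified stand-in for the pipeline (not the bc2adls code), and "consolidation" is reduced to appending rows to one file.

```python
import tempfile
from pathlib import Path
from typing import Callable, Optional


def original_sync(deltas: Path, data: Path,
                  on_mid_run: Optional[Callable[[], None]] = None) -> None:
    """Simplified model of the original flow: consolidate, then clear /deltas."""
    to_process = sorted(deltas.glob("*.csv"))  # files visible when the run starts
    if on_mid_run:
        on_mid_run()  # e.g. a BC export lands while the sync is still working
    with (data / "table.csv").open("a") as out:  # toy consolidation: append rows
        for f in to_process:
            out.write(f.read_text())
    for f in list(deltas.glob("*.csv")):  # clean-up deletes every delta, seen or not
        f.unlink()


def run_demo() -> tuple[list[str], list[str]]:
    with tempfile.TemporaryDirectory() as tmp:
        deltas, data = Path(tmp) / "deltas", Path(tmp) / "data"
        deltas.mkdir()
        data.mkdir()
        (deltas / "export_0900.csv").write_text("row-a\n")

        def bc_export_mid_run() -> None:
            (deltas / "export_1000.csv").write_text("row-b\n")

        original_sync(deltas, data, on_mid_run=bc_export_mid_run)
        consolidated = (data / "table.csv").read_text().split()
        remaining = sorted(p.name for p in deltas.iterdir())
    return consolidated, remaining


consolidated, remaining = run_demo()
print(consolidated, remaining)  # ['row-a'] []
```

row-b was exported after the run listed its files, so it is never consolidated, yet the clean-up removes it from /deltas: it is gone from both places.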

We could of course try to address the different factors resulting in the long running time, and some of them we did, but I wanted to make sure we didn't lose data again if some other factor affected the runtime of the pipelines.

Processing folder and lock file

I made two changes to address this issue. First, I added a simple semaphore-file-based lock to each entity's sync pipeline, so a new sync won't start while another is still running. This change was made in the Consolidation_CheckForDeltas pipeline, which checks whether the semaphore file exists and exits if it does. I made sure this is a "Fail" exit, so we get an error in the log and know to investigate. This ensures the pipeline won't "double-run".
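The actual change lives in the pipeline definition, but the locking logic is easy to see in code. This Python sketch is an illustration on a local filesystem, not the Consolidation_CheckForDeltas implementation; acquire_lock, release_lock, SyncAlreadyRunning and the sync.lock file name are all hypothetical. It raises an error instead of returning quietly, matching the "Fail" exit.

```python
import os
import tempfile
from pathlib import Path


class SyncAlreadyRunning(RuntimeError):
    """Raised instead of returning quietly, mirroring the pipeline's "Fail" exit."""


def acquire_lock(lock_file: Path) -> None:
    """Create the semaphore file, or fail if another run already holds it."""
    lock_file.parent.mkdir(parents=True, exist_ok=True)
    try:
        # O_CREAT | O_EXCL: "check and create" is one atomic step on a local filesystem.
        fd = os.open(lock_file, os.O_CREAT | os.O_EXCL | os.O_WRONLY)
    except FileExistsError:
        raise SyncAlreadyRunning(f"lock file present: {lock_file}") from None
    os.close(fd)


def release_lock(lock_file: Path) -> None:
    """Remove the semaphore file once the sync has completed."""
    lock_file.unlink(missing_ok=True)


outcomes = []
with tempfile.TemporaryDirectory() as tmp:
    lock = Path(tmp) / "sync.lock"  # hypothetical lock-file name
    for label in ("run 1", "run 2 (overlapping)"):
        try:
            acquire_lock(lock)
            outcomes.append(f"{label}: started")
        except SyncAlreadyRunning:
            outcomes.append(f"{label}: failed, lock held")
    release_lock(lock)  # run 1 completes
    acquire_lock(lock)
    outcomes.append("run 3: started")
    release_lock(lock)

print(outcomes)
```

Exclusive creation is used here because a separate "does the file exist?" check followed by a "create it" step leaves a small window in which two runs could both pass the check.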

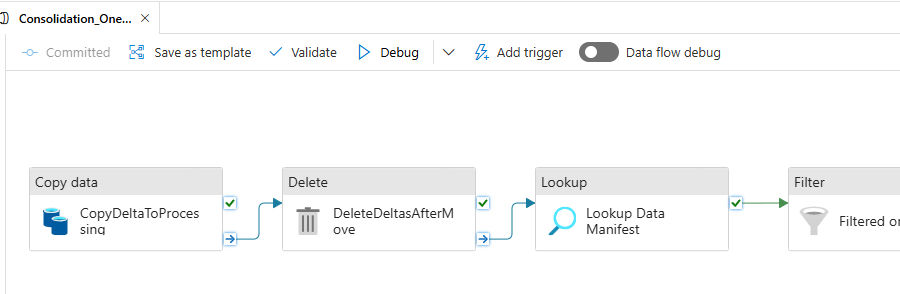

To handle the case where BC exports in the middle of a pipeline run, I changed the Consolidation_OneEntity pipeline. The base implementation reads the delta files from the /deltas folder in the storage account and combines them with the existing data in a /staging folder. After consolidation, the data files built in /staging are moved back to /data to form the new base for the data in the lake.

The change was to start the sync pipeline by moving the deltas to a /processing folder and letting the sync read from there. A new BC export can then write to /deltas without mixing up which files have been processed and which haven't.

The changed Consolidation_OneEntity pipeline now starts like this:

Since the delta-file path is read from the deltas manifest file, I also changed the schema that BC generates so it points to the processing folder. For an entity it now looks like this:

"rootLocation":"processing/PaymentTerms-3"

So, to summarize, the sync sequence for an entity is now:

Check whether the semaphore lock is in place; if it is, abandon and fail

Create the semaphore lock

Move the deltas to processing, and delete the contents of the deltas folder

Perform the sync as before, but based on the deltas in the processing folder

Remove the delta files in the processing folder

Remove the semaphore lock file (since it is placed in the processing folder, it is actually removed automatically along with the delta files)
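The sequence above can be sketched as a single Python function. This runs against a local temporary directory rather than the storage account, and sync_entity, append_rows, SyncAlreadyRunning, the sync.lock name and the folder layout (apart from processing/PaymentTerms-3, which matches the rootLocation shown above) are hypothetical. The demo has a BC export land in the middle of the consolidation step and then runs the next scheduled sync.

```python
import tempfile
from pathlib import Path
from typing import Callable

LOCK_NAME = "sync.lock"  # hypothetical semaphore file name


class SyncAlreadyRunning(RuntimeError):
    """Stands in for the pipeline's "Fail" exit."""


def append_rows(files: list[Path], data: Path) -> None:
    """Toy consolidation: append every delta file's rows to one data file."""
    with (data / "table.csv").open("a") as out:
        for f in files:
            out.write(f.read_text())


def sync_entity(deltas: Path, processing: Path, data: Path,
                consolidate: Callable[[list[Path], Path], None]) -> None:
    lock = processing / LOCK_NAME
    # 1. If the lock is in place, abandon and fail.
    if lock.exists():
        raise SyncAlreadyRunning(f"{lock} exists: run still active or failed earlier")
    # 2. Create the lock inside the processing folder.
    processing.mkdir(parents=True, exist_ok=True)
    lock.touch(exist_ok=False)
    # 3. Move the deltas to processing; this also empties /deltas.
    for f in sorted(deltas.glob("*.csv")):
        f.rename(processing / f.name)
    # 4. Sync as before, reading only from the processing folder.
    consolidate(sorted(processing.glob("*.csv")), data)
    # 5 + 6. Clear the processing folder; the lock file goes with the deltas.
    for f in list(processing.iterdir()):
        f.unlink()


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    deltas = root / "deltas" / "PaymentTerms-3"  # hypothetical layout
    processing = root / "processing" / "PaymentTerms-3"
    data = root / "data" / "PaymentTerms-3"
    deltas.mkdir(parents=True)
    data.mkdir(parents=True)
    (deltas / "export_0900.csv").write_text("row-a\n")

    def consolidate_while_bc_exports(files: list[Path], data_dir: Path) -> None:
        (deltas / "export_1000.csv").write_text("row-b\n")  # export lands mid-sync
        append_rows(files, data_dir)

    sync_entity(deltas, processing, data, consolidate_while_bc_exports)
    rows = (data / "table.csv").read_text().split()
    pending = sorted(p.name for p in deltas.iterdir())
    leftover = sorted(p.name for p in processing.iterdir())

    sync_entity(deltas, processing, data, append_rows)  # next scheduled run
    rows_after_next_run = (data / "table.csv").read_text().split()

print(rows, pending, leftover)  # ['row-a'] ['export_1000.csv'] []
print(rows_after_next_run)      # ['row-a', 'row-b']
```

Because the lock is only removed in the final clean-up, a run that fails during consolidation leaves both the lock and its claimed deltas in the processing folder, so the next scheduled run fails at step 1 instead of touching them.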

Since making these changes, we have not seen any of these sync failures.
